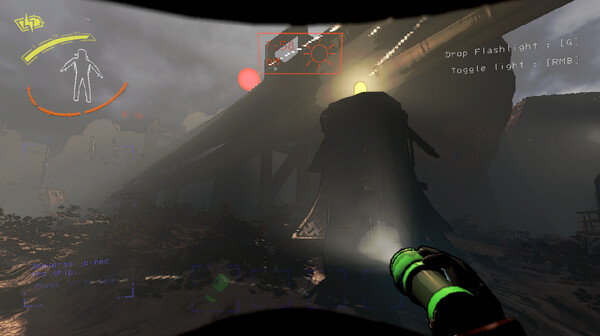

Copa City, hailed as a next-generation football simulation game, impressed players with vibrant urban settings, realistic crowds, and immersive street football dynamics. But behind the visuals and fluid gameplay lies a growing controversy surrounding one of the game’s most ambitious features: the AI-driven referee system.

Touted as a revolutionary mechanic to bring realism and unpredictability into street matches, the AI referee has sparked heated debates across the community. From inconsistent foul calls to controversial red cards and cultural bias in penalties, players have grown skeptical. This article explores the system’s evolution, the technical decisions behind it, and its implications for fairness, competition, and design in Copa City.

1. The Vision Behind the Referee AI

Before Copa City launched, the developers promised a new era of football realism. Unlike traditional games with fixed rule sets and predictable calls, Copa City aimed for something bolder: referees with personalities. Each match would feature a different AI with tendencies—some strict, others lenient, some aggressive or passive. This was meant to emulate real-world urban football, where the referee might be a retired player, a biased neighbor, or a random community figure.

Fans were intrigued. Developers showcased examples where referees allowed physical play in some matches but blew the whistle at the lightest contact in others. It felt dynamic and organic. But once released, this promising system quickly showed its flaws.

2. Launch Day Chaos: When AI Fairness Fell Apart

At launch, players immediately noticed strange referee behavior. In one viral clip, a player received a red card for celebrating too enthusiastically. In another, a clear foul went unnoticed, leading to a decisive goal. Rather than realism, the referee system felt random and unfair.

Competitive streamers and ranked players were hit the hardest. Poor AI decisions in critical matches caused outrage. Initially, developers dismissed the issues as isolated bugs. But it became clear this wasn’t an occasional glitch—it was a foundational problem with the system.

3. Understanding the AI Architecture

The Neural Net Behind the Whistle

Copa City’s referee AI isn’t rule-based—it’s powered by machine learning. Trained on thousands of real-life street football videos, the model interprets player actions, context, aggression, and intent to make decisions on fouls, penalties, and cards.

Data Bias in AI Training

But the model was heavily influenced by its training data. Most footage came from Latin America and Southern Europe, so regional differences in how much physical contact is tolerated were not balanced in the dataset. A shoulder nudge seen as “normal” in one region might be “reckless” in another. As a result, refereeing varied with the match’s in-game location.
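One common way to soften this kind of imbalance is to reweight training samples so that each region contributes equally overall. The sketch below is only an illustration of that general technique, not a description of what the Copa City developers did; the function name `region_balance_weights` and the region labels are assumptions made up for the example.

```python
from collections import Counter

def region_balance_weights(clip_regions):
    """Return one weight per clip so every region has the same total weight.

    Hypothetical helper: uses inverse-frequency weighting, where a clip from
    a region with few samples gets a proportionally larger weight.
    """
    counts = Counter(clip_regions)
    n_regions = len(counts)
    total = len(clip_regions)
    # Each region's weights sum to total / n_regions.
    return [total / (n_regions * counts[region]) for region in clip_regions]

# Illustrative labels only: a dataset skewed toward two regions.
regions = ["latin_america"] * 6 + ["southern_europe"] * 3 + ["other"] * 1
weights = region_balance_weights(regions)

# Sum the weights per region to confirm they are balanced.
per_region = {}
for region, weight in zip(regions, weights):
    per_region[region] = per_region.get(region, 0.0) + weight
print(per_region)
```

Reweighting does not add missing footage; it only stops the best-represented regions from dominating what the model learns as “normal” contact.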

4. Emergence of AI "Personalities"

Copa City further complicated things by giving referees “personality profiles.” Some were stricter, some more relaxed, others even erratic. While this added depth, it conflicted with player expectations, especially in competitive play.

There was no way to know which referee type you’d get before a match. One might let multiple fouls slide, then send you off for a minor push. What was intended as realism turned into chaos, making matches feel unpredictable and unfair.
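To see why a hidden personality feels unfair, it helps to model a profile as a pair of thresholds applied to the same incident. This is a deliberately simplified sketch: the article describes the real system as a learned model, and the `RefereeProfile` class, the `call` function, and every number below are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RefereeProfile:
    """Hypothetical personality: two cut-offs on a 0-to-1 contact severity scale."""
    name: str
    foul_threshold: float  # severity at or above this is a foul
    card_threshold: float  # severity at or above this is a yellow card

def call(profile: RefereeProfile, severity: float) -> str:
    """Return the decision this profile makes for a contact of given severity."""
    if severity >= profile.card_threshold:
        return "yellow card"
    if severity >= profile.foul_threshold:
        return "foul"
    return "play on"

STRICT = RefereeProfile("strict", foul_threshold=0.2, card_threshold=0.5)
LENIENT = RefereeProfile("lenient", foul_threshold=0.6, card_threshold=0.9)

shoulder_nudge = 0.55  # the same incident, judged by two referees
for ref in (STRICT, LENIENT):
    print(ref.name, "->", call(ref, shoulder_nudge))
```

With these made-up numbers the strict referee books the player while the lenient one waves play on. A player who cannot see the profile before kickoff has no way to know which outcome to expect.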

5. The Rise of "Ref Farming" Tactics

Abusing the AI’s Patterns

Players eventually began learning the referee AI patterns. Some used this knowledge to adjust playstyles, a strategy dubbed “ref farming.” If a ref was lenient on tackles, they played aggressively. If a ref penalized slides harshly, they avoided them.

Exploitation vs Adaptation

What started as clever adaptation became exploitation. Players dodged matches with strict referees. Some manipulated game files to predict or change AI behavior. Ranked matches became more about working the system than playing football.

6. Player Feedback and Developer Silence

The community quickly turned vocal. Social media, Reddit, and forums filled with memes, complaints, and footage of bad calls. Players demanded a pre-match referee preview, an option to turn off personalities, or a complete AI rework.

But the developers responded slowly. During a live Q&A, one lead AI developer admitted they underestimated the impact of inconsistency. Still, no major update arrived for months. Many players lost hope for a real fix.

7. Psychological Impact on Player Behavior

The AI didn’t just affect gameplay—it affected how players thought. Fear of unfair calls led to cautious, defensive styles. Creativity and flair dropped. Matches lost the expressive, improvisational energy that Copa City was designed to showcase.

Worse, some players developed “ref trauma.” They avoided tackles or celebrations out of fear. The AI referee, designed to immerse players, instead constrained them mentally and emotionally.

8. The Competitive Scene Backlash

Referees in Tournaments

Copa City’s early esports tournaments featured the AI referee system intact. The result? Pandemonium. One finalist lost due to an uncalled handball, sparking massive backlash. Fans and teams demanded change.

Turning Off Ref AI in Pro Play

Eventually, organizers began disabling referee personalities in pro play. They forced matches to use a neutral referee or a rule-based system. Copa City’s core feature had to be removed for fair competition—a huge blow to the game’s identity.
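A rule-based fallback of the kind organizers turned to can be as simple as a fixed lookup from incident type to sanction. The sketch below is an assumption about what such a system might look like, not the tournament implementation; the `RULEBOOK` entries and the `neutral_referee` function are invented for illustration.

```python
# Hypothetical rulebook: every incident type maps to exactly one sanction.
RULEBOOK = {
    "handball": "free kick",
    "slide_tackle_from_behind": "yellow card",
    "violent_conduct": "red card",
    "goal_celebration": "no call",
}

def neutral_referee(incident: str) -> str:
    """Return the fixed sanction for an incident; unknown incidents are not penalized."""
    return RULEBOOK.get(incident, "no call")

# The same incident always produces the same decision, in every match.
decisions = [neutral_referee("handball") for _ in range(3)]
print(decisions)
```

The trade-off is visible in the code itself: nothing here has a personality, but every player can read the rulebook in advance and every call is repeatable.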

9. Attempts at Reform: Patches and Public Betas

Under pressure, the developers rolled out updates. They rebalanced foul detection, reduced randomness, and introduced the “Referee AI Sandbox.” This let players test personalities and vote on improvements.

These were positive steps. Community testing helped refine the model, and new datasets offered more cultural balance. But for many competitive players, the damage was already done. Copa City had lost their trust.

10. What Copa City Teaches About AI and Fairness in Games

Copa City’s AI referee system was bold, even visionary. But it also revealed how AI-driven unpredictability can undermine fairness, especially in competitive environments. Because players could not see which referee they faced or why a call was made, they had no reliable way to adapt and felt powerless.

More broadly, Copa City teaches game developers and AI designers a critical lesson: realism must be balanced with clarity. When systems feel unfair or opaque, trust breaks down—even if the intention was immersion.

Conclusion

Copa City’s referee AI system was built with ambition. It aimed to recreate the chaotic beauty of real street football. Instead, it delivered frustration, inconsistency, and controversy. Rather than adding depth, it introduced randomness that hurt both casual and competitive players.

For the industry, this serves as a warning. AI systems in games need clear rules, balanced training, and predictable behavior—especially when tied to competition. Copa City’s failure wasn’t in the concept, but in its execution. It remains a valuable case study in the risks of using AI to simulate complex human judgment.